Huawei has announced that MindSpore, its Deep Learning framework in the style of TensorFlow and PyTorch, is now open source. This article reviews its most important characteristics.

Huawei has just announced that MindSpore, its framework for developing AI applications, is becoming open source and is available on GitHub and Gitee. MindSpore is yet another Deep Learning framework for training neural network models, similar to TensorFlow or PyTorch. It is designed for use from the Edge to the Cloud, and it supports both GPUs and, naturally, Huawei's own Ascend processors.

MindSpore was first presented last August, when Huawei announced the official launch of its Ascend processor. Huawei stated then that "in a typical ResNet-50 based training session, the combination of Ascend 910 and MindSpore is about twice as fast at training AI models as other mainstream training cards using TensorFlow." It is true that many frameworks have emerged in recent years, and perhaps MindSpore will turn out to be just one more of them, unable to compete even remotely with TensorFlow (backed by Google) and PyTorch (backed by Facebook).

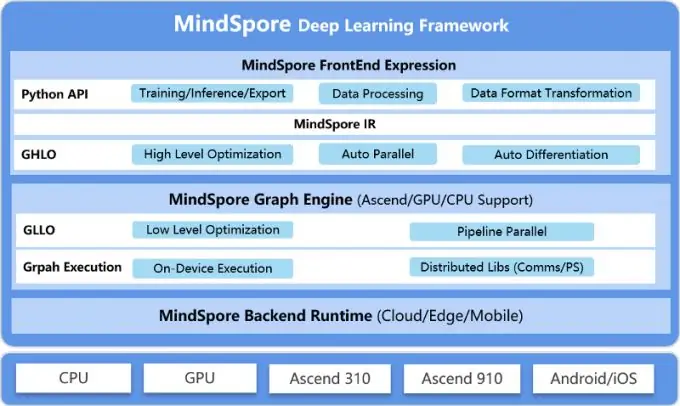

System architecture

The MindSpore website describes an architecture made up of three main layers: frontend expression, graph engine, and backend runtime. The following figure shows a visual diagram:

The first layer of MindSpore offers a Python API for programmers. This is no surprise: Python is the de facto lingua franca of our community, and MindSpore wants to compete with PyTorch and TensorFlow. With this API, programmers can manipulate models (training, inference, etc.) and process data. This first layer also includes support for an intermediate representation of the code (MindSpore IR), on which many of the optimizations for parallelization and automatic differentiation (GHLO) are based.

Below it is the Graph Engine layer, which provides the functionality needed to build and execute the execution graph, including its automatic differentiation. For MindSpore, they opted for an automatic differentiation model different from both PyTorch's (which builds a dynamic execution graph) and TensorFlow's (which originally chose a more efficient static execution graph, but now also offers a dynamic execution graph and lets you obtain a static version of the graph with the @tf.function decorator).

MindSpore's choice is to transform the source code into an intermediate representation (MindSpore IR) in order to get the advantages of both models (for more information, see the "Automatic Differentiation" section on the MindSpore website).
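To make the idea of differentiating an intermediate representation more concrete, here is a minimal sketch in plain Python. The toy IR (a list of `Instr` records supporting only `mul`, `add` and `sin`) and the `evaluate` and `grad` helpers are hypothetical and unrelated to the real MindSpore IR format. The program is first evaluated forward; reverse-mode differentiation then walks the same instruction list backwards, accumulating the adjoint (the derivative of the output with respect to each value). Because the whole program is known before it runs, a framework can analyse and optimize it ahead of time, which is the main benefit of a static representation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    """One instruction of a toy SSA-style IR (hypothetical, not MindSpore IR)."""
    out: str     # name of the value this instruction defines
    op: str      # "mul", "add" or "sin"
    args: tuple  # names of the input values


def evaluate(program, inputs):
    """Run the IR forward and return every intermediate value by name."""
    env = dict(inputs)
    for ins in program:
        vals = [env[a] for a in ins.args]
        if ins.op == "mul":
            env[ins.out] = vals[0] * vals[1]
        elif ins.op == "add":
            env[ins.out] = vals[0] + vals[1]
        elif ins.op == "sin":
            env[ins.out] = math.sin(vals[0])
        else:
            raise ValueError(f"unknown op {ins.op!r}")
    return env


def grad(program, inputs, output):
    """Reverse-mode AD: walk the IR backwards, accumulating d(output)/d(value)."""
    env = evaluate(program, inputs)
    adj = {output: 1.0}
    for ins in reversed(program):
        g = adj.get(ins.out, 0.0)
        if ins.op == "mul":
            a, b = ins.args
            adj[a] = adj.get(a, 0.0) + g * env[b]
            adj[b] = adj.get(b, 0.0) + g * env[a]
        elif ins.op == "add":
            a, b = ins.args
            adj[a] = adj.get(a, 0.0) + g
            adj[b] = adj.get(b, 0.0) + g
        elif ins.op == "sin":
            (a,) = ins.args
            adj[a] = adj.get(a, 0.0) + g * math.cos(env[a])
    return {name: adj.get(name, 0.0) for name in inputs}


# f(x, y) = sin(x * y) + x expressed in the toy IR
program = [
    Instr("t1", "mul", ("x", "y")),
    Instr("t2", "sin", ("t1",)),
    Instr("f", "add", ("t2", "x")),
]
# Analytically: df/dx = y*cos(x*y) + 1 and df/dy = x*cos(x*y)
print(grad(program, {"x": 2.0, "y": 3.0}, "f"))
```

Note that a value used twice (for example `x` above, or `x * x`) simply receives two contributions to its adjoint, which is why the adjoints are accumulated rather than assigned.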

The final layer consists of all the libraries and runtime environments needed to support the various hardware architectures on which the code will run. Most likely this backend is very similar to those of other frameworks, with some Huawei-specific components such as HCCL (Huawei Collective Communication Library), the equivalent of NVIDIA's NCCL (NVIDIA Collective Communications Library).
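Libraries like NCCL and HCCL provide collective operations, and the most important one for training is all-reduce: every device contributes a buffer (for example, its gradients) and every device ends up with the elementwise sum. The sketch below simulates the classic ring all-reduce algorithm (a reduce-scatter phase followed by an all-gather phase) in plain Python, with lists standing in for device buffers. It only illustrates the communication pattern; the function name is our own, and it is neither the API of HCCL or NCCL nor a model of their actual implementation details.

```python
def ring_all_reduce(buffers):
    """Simulated sum all-reduce over a ring of len(buffers) ranks."""
    n = len(buffers)
    length = len(buffers[0])
    data = [list(b) for b in buffers]  # copy so the inputs are not mutated
    # Split every buffer into n contiguous chunks (sizes may differ by one).
    bounds = [(i * length // n, (i + 1) * length // n) for i in range(n)]

    # Phase 1, reduce-scatter: after n-1 steps rank r holds the full sum of chunk (r+1) % n.
    for step in range(n - 1):
        # Collect all messages first so that every send in a step is "simultaneous".
        messages = []
        for rank in range(n):
            c = (rank - step) % n
            lo, hi = bounds[c]
            messages.append(((rank + 1) % n, c, data[rank][lo:hi]))
        for dst, c, payload in messages:
            lo, _ = bounds[c]
            for i, v in enumerate(payload):
                data[dst][lo + i] += v

    # Phase 2, all-gather: pass each fully reduced chunk around the ring.
    for step in range(n - 1):
        messages = []
        for rank in range(n):
            c = (rank + 1 - step) % n
            lo, hi = bounds[c]
            messages.append(((rank + 1) % n, c, data[rank][lo:hi]))
        for dst, c, payload in messages:
            lo, hi = bounds[c]
            data[dst][lo:hi] = payload
    return data


print(ring_all_reduce([[1.0, 2.0], [3.0, 4.0]]))  # [[4.0, 6.0], [4.0, 6.0]]
```

The appeal of the ring scheme is that each rank sends 2(n-1) chunks of roughly length/n elements, so the traffic per device stays close to twice the buffer size no matter how many devices take part.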

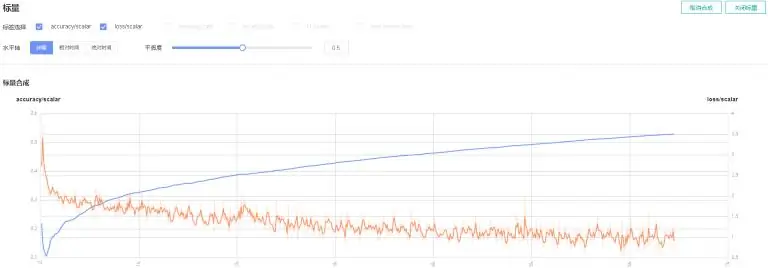

Training visualization support

According to the MindSpore tutorial, they offer MindInsight, a tool for creating visualizations somewhat reminiscent of TensorFlow's TensorBoard, although I was unable to install and use it. Take a look at some of the screenshots shown on their website:

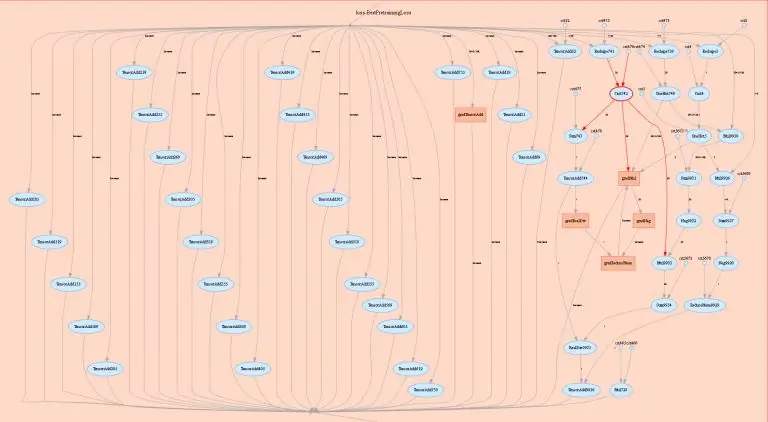

According to the manual, MindSpore currently uses a callback mechanism (reminiscent of Keras) to write to a log file, during training, whichever model parameters and hyperparameters we want, as well as the computation graph once the neural network has been compiled into intermediate code.
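The callback idea can be illustrated without MindSpore itself. In this hypothetical sketch, the `Callback` base class, the `SummaryLogger` subclass and the toy `train` loop are invented for illustration and are not MindSpore's classes: the loop calls `on_step_end` after every step, and the logger writes the loss, the current weight and the learning rate as one JSON line every few steps, much as a summary file would be filled during training.

```python
import io
import json


class Callback:
    """Base class: the training loop calls these hooks; subclasses override what they need."""

    def on_step_end(self, step, metrics):
        pass

    def on_train_end(self):
        pass


class SummaryLogger(Callback):
    """Hypothetical logger that writes one JSON record every `every` steps."""

    def __init__(self, stream, every=1):
        self.stream = stream
        self.every = every

    def on_step_end(self, step, metrics):
        if step % self.every == 0:
            self.stream.write(json.dumps({"step": step, **metrics}) + "\n")

    def on_train_end(self):
        self.stream.flush()


def train(steps, lr, callbacks):
    """Toy training loop: gradient descent on loss(w) = (w - 3)^2."""
    w = 0.0
    for step in range(steps):
        grad = 2.0 * (w - 3.0)
        w -= lr * grad
        loss = (w - 3.0) ** 2
        for cb in callbacks:
            cb.on_step_end(step, {"loss": loss, "w": w, "lr": lr})
    for cb in callbacks:
        cb.on_train_end()
    return w


log = io.StringIO()  # a file opened with open(path, "w") would work the same way
final_w = train(steps=50, lr=0.1, callbacks=[SummaryLogger(log, every=10)])
print(log.getvalue())
```

The training loop knows nothing about logging; it only calls the hooks, so new kinds of monitoring can be added without touching it, which is the point of the callback design.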

Parallelism

In their tutorial, they describe two parallelization modes (DATA_PARALLEL and AUTO_PARALLEL) and present sample code that trains ResNet-50 on a CIFAR dataset for an Ascend 910 processor (which I was unable to test). DATA_PARALLEL refers to the strategy commonly known as data parallelism, which consists of splitting the training data into multiple subsets, each processed by an identical replica of the model running on a different processing unit. The Graph Engine layer provides the support for parallelizing the code, in particular for AUTO_PARALLEL mode.
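The essence of data parallelism fits in a few lines of plain Python. This is not MindSpore code: `gradient` and `data_parallel_step` are hypothetical helpers for a one-variable linear regression, and the workers are simulated sequentially. Each worker holds the same parameters (an identical model replica) and computes the gradient on its own shard of the batch; the gradients are then averaged, the job an all-reduce performs on real hardware. With equally sized shards, the averaged gradient equals the full-batch gradient, so the update matches what a single device would compute.

```python
def gradient(params, batch):
    """Mean-squared-error gradient of y_hat = w * x + b over one shard."""
    w, b = params
    n = len(batch)
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2.0 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def data_parallel_step(params, batch, num_workers, lr):
    """One synchronous data-parallel SGD step (workers simulated one after another)."""
    shards = [batch[i::num_workers] for i in range(num_workers)]
    # Every worker applies the same model replica to a different shard.
    grads = [gradient(params, shard) for shard in shards]
    # Averaging the per-worker gradients plays the role of an all-reduce.
    grad_w = sum(g[0] for g in grads) / num_workers
    grad_b = sum(g[1] for g in grads) / num_workers
    w, b = params
    return (w - lr * grad_w, b - lr * grad_b)


# Synthetic data for y = 2x + 1: 40 samples, split across 4 workers (10 each).
data = [(x / 10, 2 * (x / 10) + 1) for x in range(-20, 20)]
params = (0.0, 0.0)
for _ in range(200):
    params = data_parallel_step(params, data, num_workers=4, lr=0.1)
print(params)  # approaches (2.0, 1.0)
```

If the shards had different sizes, a plain average of per-shard means would weight samples unequally; real systems usually avoid this by giving every worker the same per-device batch size.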

AUTO_PARALLEL mode automatically optimizes parallelization by combining the data parallelism strategy (discussed above) with the model parallelism strategy, in which the model is divided into different parts and each part is executed in parallel on a different processing unit. This automatic mode selects the parallelization strategy that offers the greatest benefit, as described in the Automatic Parallel section of the MindSpore website (although they do not explain how the estimates and decisions are made). We will have to wait for the technical team to expand the documentation before we can understand the auto-parallelization strategy in more detail. But this strategy is clearly critical: it is where they can and should compete with TensorFlow or PyTorch, by achieving significantly better performance on Huawei processors.
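Model parallelism, the other ingredient of AUTO_PARALLEL, can also be illustrated in isolation. In this hypothetical sketch (plain Python lists instead of tensors, helper names of our own), the weight matrix of a single linear layer is split by columns across several simulated devices. Each device multiplies the same input by its slice of the weights, and concatenating the partial outputs (an all-gather in a real system) reproduces the full result. It says nothing about how MindSpore decides where to split, which, as noted, is not documented.

```python
def matmul(a, b):
    """Plain-Python product of an (n x k) matrix and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def split_columns(w, parts):
    """Split W column-wise into `parts` contiguous slices (one per device)."""
    cols = len(w[0])
    bounds = [(p * cols // parts, (p + 1) * cols // parts) for p in range(parts)]
    return [[row[lo:hi] for row in w] for lo, hi in bounds]


def model_parallel_linear(x, w, num_devices):
    """Compute x @ W with the columns of W sharded across simulated devices."""
    shards = split_columns(w, num_devices)      # each device stores only its slice
    partials = [matmul(x, s) for s in shards]   # independent work per device
    # Concatenate the partial outputs along the column axis.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2, 3], [4, 5, 6]]
w = [[1, 0, 2, -1], [0, 1, 1, 3], [2, -2, 0, 1]]
print(model_parallel_linear(x, w, num_devices=2) == matmul(x, w))  # True
```

Splitting by columns means each device needs the whole input but only part of the weights, which is useful when a layer's parameters are too large for one device; an automatic strategy has to weigh that memory saving against the extra communication.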

Planned roadmap and how to contribute

Obviously there is a lot of work to be done. For now, they have laid out the ideas they have in mind for next year in an extensive roadmap, but they state that priorities will be adjusted according to user feedback. At the moment, these are the main lines of work:

- Support for more models (classic models still pending, GANs, RNNs, Transformers, reinforcement learning models, probabilistic programming, AutoML, etc.).

- Extend the APIs and libraries to improve usability and the programming experience (more operators, more optimizers, more loss functions, etc.).

- Full support for Huawei Ascend processors and performance optimization (compilation optimization, better resource utilization, etc.).

- Evolution of the software stack and computational graph optimizations (improving the intermediate representation, adding further optimization capabilities, etc.).

- Support for more programming languages (not just Python).

- Improved distributed learning with optimization of automatic scheduling, data distribution, etc.

- Improve the MindInsight tool to make it easier for programmers to "debug" and to tune hyperparameters during training.

- Progress in deploying inference on Edge devices (security, support for models from other frameworks via ONNX, etc.).

On the community page, you can see that MindSpore has partners outside Huawei and China, such as the University of Edinburgh, Imperial College London, the University of Münster (Germany) and Paris-Saclay University. They say they will follow an open governance model, and they invite the entire community to contribute to both the code and the documentation.

Conclusion

After a quick first look, it seems that the right design and implementation decisions (such as those on parallelism and automatic differentiation) could open up room for improvements and optimizations that deliver better performance than the frameworks they want to outperform. But there is still a lot of work ahead to catch up with PyTorch and TensorFlow and, above all, to build a community, which is no small task! However, we all know that with the backing of a large company in the sector like Huawei, anything is possible. Or was it obvious three years ago, when the first version of PyTorch (Facebook) came out, that it would end up close on the heels of TensorFlow (Google)?